The terminology behind even the most basic concepts in computer technology can be a real deal breaker for technological neophytes. One area that frequently trips people up is the set of terms used to measure computer data. Yes, we’re talking about bits, bytes and all their many multiples. These units matter to anyone who works with a computer in any depth, because they are used to describe storage, computing power and data transfer rates.

Here is a simple explanation of what these measurements mean.

What’s a Bit?

Any basic explanation of computing or telecommunications must start with the bit, short for binary digit. A bit is the smallest unit of digital information: it represents either a zero or a one. It is the tiniest building block of every email and text message you receive. Encoding data as two-state values dates back to the punch card systems used with the first mechanical computing machines, where the presence or absence of a hole carried the information. Binary information that was once stored in the mechanical position of a computer’s lever or gear is now represented by an electrical voltage or current pulse. Welcome to the digital age!
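To make this concrete, here is a minimal Python sketch that shows the bits behind a short text message. It assumes the text is encoded as UTF-8, and the function name `text_to_bits` is hypothetical:

```python
def text_to_bits(text: str) -> str:
    """Return the bits of each UTF-8 byte of `text`, one 8-digit group per byte."""
    # Encode the text to bytes, then format each byte as exactly 8 binary digits.
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


print(text_to_bits("Hi"))  # 01001000 01101001
```

Each group of eight digits is one character here, because plain ASCII letters take a single byte in UTF-8; other characters, such as emoji, take more.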

What’s a Byte?

If you’re confused by the difference between bits and bytes, you’re not alone. In fact, the term was deliberately spelled “byte” rather than “bite” so that it would not be accidentally (and incorrectly) shortened to “bit.” Unfortunately, the unusual spelling didn’t clear up all the confusion.

A byte is a group of bits, most commonly eight. Grouping bits into bytes makes computer hardware, networking equipment, disks and memory more efficient, because data can be addressed and moved in fixed-size chunks rather than one bit at a time. Early computers did not all agree on a byte size (six-bit character codes were common), and the eight-bit byte became the de facto standard after IBM adopted it for its System/360 family in the 1960s. Eight bits suited hardware built around eight parallel data lines, or “pathways,” in and out of processors and memory chips: at any moment, each of the eight pathways is either “off” or “on.”
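The way eight on/off states combine into a single byte value can be sketched in a few lines of Python. This is an illustration only: the function name `bits_to_byte` is hypothetical, and it assumes the bits are listed most significant first.

```python
def bits_to_byte(bits: list[int]) -> int:
    """Combine eight on/off states (most significant first) into one byte value."""
    if len(bits) != 8 or any(b not in (0, 1) for b in bits):
        raise ValueError("expected exactly eight 0/1 values")
    value = 0
    for bit in bits:
        # Shift the bits collected so far one place left, then add the new bit.
        value = (value << 1) | bit
    return value


print(bits_to_byte([0, 1, 0, 0, 0, 0, 0, 1]))  # 65, the character code for "A"
print(2 ** 8)  # 256 distinct on/off patterns fit in one byte
```

Because each of the eight positions has two possible states, one byte can hold 2^8 = 256 different patterns, numbered 0 to 255.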

When Metric Meets Binary

Pretty simple, right? Not so fast. When it comes to computers, terms such as kilobyte (KB), megabyte (MB) and gigabyte (GB), along with their abbreviations, can have slightly different meanings. Bits and bytes are based on binary (base 2), while the decimal system is based on powers of 10 (base 10), and powers of two don’t line up neatly with round decimal numbers. So, in the traditional binary usage, a kilobyte is 1,024 bytes, not the 1,000 bytes that the kilo prefix suggests. If this doesn’t make sense, do the math yourself: 2^10 = 1,024, the power of two closest to 1,000.
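A short Python sketch makes the gap between the decimal and binary readings of each prefix visible. The prefix list and the helper `prefix_sizes` are illustrative, not part of any standard library:

```python
PREFIXES = ["kilo", "mega", "giga", "tera"]


def prefix_sizes(power: int) -> tuple[int, int]:
    """Return (decimal, binary) byte counts for the prefix at `power` (1 = kilo)."""
    return 1000 ** power, 1024 ** power


for power, name in enumerate(PREFIXES, start=1):
    decimal, binary = prefix_sizes(power)
    # How much larger the binary reading is than the decimal one, in percent.
    gap = (binary / decimal - 1) * 100
    print(f"{name}byte: {decimal:,} vs {binary:,} bytes ({gap:.1f}% larger)")
```

The difference grows with every step: about 2.4% at kilo, 4.9% at mega, 7.4% at giga and nearly 10% at tera.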

This differs from the International System of Units (SI), in which kilo, mega, giga and so on mean powers of 1,000, and parts of the computer industry follow the SI meaning. Hard drive makers, for example, use decimal prefixes, so a 500 gigabyte hard drive holds 500,000,000,000 bytes. Network speeds are also quoted in decimal units.
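This is one reason a new drive can look smaller than advertised: some operating systems report capacity in binary gigabytes (2^30 bytes, also written GiB) while the label uses decimal gigabytes. A minimal Python sketch of the conversion, with a hypothetical function name:

```python
def decimal_gb_to_binary_gb(gigabytes: float) -> float:
    """Convert an advertised (decimal) capacity in GB to binary gigabytes."""
    total_bytes = gigabytes * 1000 ** 3  # SI: 1 GB = 1,000,000,000 bytes
    return total_bytes / 1024 ** 3       # binary: 2**30 = 1,073,741,824 bytes


print(f"{decimal_gb_to_binary_gb(500):.2f}")  # 465.66
```

No bytes are missing; the same 500,000,000,000 bytes are simply divided by a larger unit.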

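Said another way, SI prefixes are based on powers of 10 (kilo = 10^3 = 1,000), while computers operate in binary, so binary multiples are powers of two (2^10 = 1,024). The same prefix can therefore name two slightly different quantities, depending on which numbering system is in use. To remove the ambiguity, the International Electrotechnical Commission (IEC) defined separate binary prefixes, such as kibibyte (KiB, 1,024 bytes) and gibibyte (GiB, 2^30 bytes), although the older usage is still widespread.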

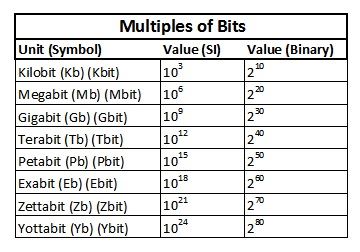

Multiples of Bits

Of course, the multiples run all the way up to yotta, but so far real-world technology doesn’t extend much beyond peta. Terabit Ethernet is considered the next frontier of Ethernet speed, above the 100 and 400 gigabit Ethernet standards in use today. Beyond that, much higher data transfer rates remain largely experimental.

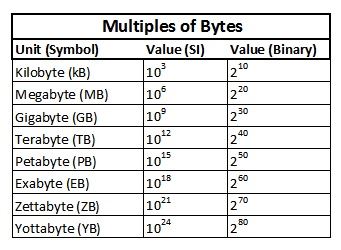

Multiples of Bytes

As with bits, this chart can technically continue indefinitely, but the largest multiples rarely describe a single device. Multi-terabyte (TB) hard drives are now common on the consumer side, while petabyte (PB) storage is used by servers, research facilities and data centers. Larger multiples such as exabytes and zettabytes mostly appear in aggregate figures, such as the total capacity of a large cloud provider or estimates of global data volume.

The Problem With Abbreviations

As you might have guessed from the tables above, the abbreviations for multiples of bits and bytes also cause confusion. Because they look so similar, they are often used interchangeably, which is incorrect. By convention, a lowercase “b” stands for bit and an uppercase “B” for byte, so Mb is a megabit and MB is a megabyte, eight times larger. A one-letter difference in capitalization is easy to miss, which is why bits also have a second set of abbreviations (kbit, Mbit, etc.) that spell out the unit for the sake of clarity.
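Mixing up Mb and MB produces an error by a factor of eight, which often shows up when comparing connection speeds (usually quoted in megabits per second) with file sizes (usually shown in megabytes). The Python sketch below assumes decimal prefixes on both sides and ignores protocol overhead; the function names are hypothetical:

```python
BITS_PER_BYTE = 8


def mbit_to_mbyte_per_s(megabits_per_second: float) -> float:
    """Convert a link speed in Mbit/s to a transfer rate in MB/s (same decimal prefix)."""
    return megabits_per_second / BITS_PER_BYTE


def download_seconds(file_megabytes: float, megabits_per_second: float) -> float:
    """Ideal download time in seconds, ignoring protocol overhead."""
    return file_megabytes / mbit_to_mbyte_per_s(megabits_per_second)


print(mbit_to_mbyte_per_s(100))    # 12.5
print(download_seconds(500, 100))  # 40.0
```

So a 100 Mbit/s connection moves at most 12.5 MB of data per second, and a 500 MB file takes at least 40 seconds to download.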

Where Things Get Confusing

So let’s say you buy a memory upgrade for your computer. It’s advertised as being 128 megabytes. But then you notice that the data sheet for the product says that you have a module with 64 megabit parts. Were you ripped off?

Nope. Here’s why: your 128 megabyte module is built from 16 memory chips of 64 megabits each. That gives 16 × 64 megabits = 1,024 megabits, and 1,024 megabits ÷ 8 bits per byte = 128 megabytes.
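The same arithmetic fits in a tiny Python function (the name `module_capacity_megabytes` is hypothetical), which can be reused to check any module whose data sheet lists chip density in megabits:

```python
def module_capacity_megabytes(chip_count: int, megabits_per_chip: int) -> float:
    """Total module capacity in megabytes, given per-chip density in megabits."""
    total_megabits = chip_count * megabits_per_chip
    return total_megabits / 8  # 8 bits per byte


print(module_capacity_megabytes(16, 64))  # 128.0
```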

That’s It?

Confusion about bits and bytes is common. The key is to understand how the units relate to each other and whether a figure uses binary (base 2) or decimal (base 10) multiples. Getting comfortable with these basic concepts is a good step toward understanding your computer and all its related components.