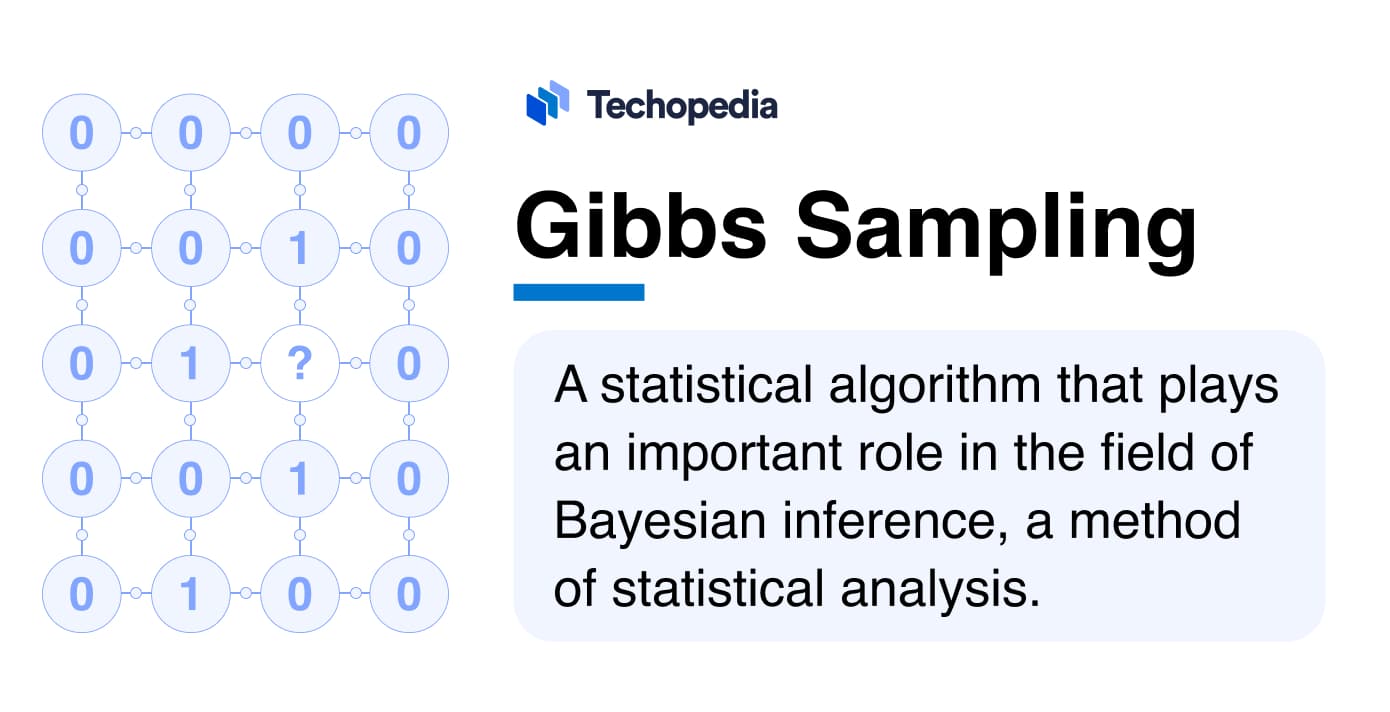

What is Gibbs Sampling?

Gibbs Sampling is a statistical algorithm that plays an important role in Bayesian inference, a method of statistical analysis. It draws samples from a joint probability distribution by repeatedly sampling each variable from its conditional distribution given the others. This allows statisticians and data scientists to draw conclusions and make predictions from models that are too intricate to analyze using traditional methods.

The Gibbs Sampling algorithm is especially valuable in data science and machine learning (ML), where it helps in understanding and predicting data patterns. This is becoming increasingly important as data volumes continue to grow rapidly.

So, why is Gibbs Sampling so important? In Bayesian inference, we often deal with probability distributions over many unknowns that cannot be calculated or sampled directly because the model is too complex. This is where Gibbs Sampling shines.

It simplifies the process by breaking down these complexities into smaller, more manageable pieces. By iteratively updating the estimates of the variables at play, Gibbs Sampling provides a practical way to approximate these elusive probabilities.

Techopedia Explains the Gibbs Sampling Meaning

Gibbs Sampling has a wide range of practical applications. It’s used in fields as diverse as marketing, where it can help predict consumer behavior, and pharmaceuticals, where it supports drug development. In machine learning, it supports algorithms for pattern recognition and natural language processing (NLP), contributing to the development of sophisticated AI systems.

So, when you think of Gibbs Sampling, don’t just think of it as a statistical tool, but rather a major component in the overall decision-making process.

The Historical Background of Gibbs Sampling

Gibbs Sampling has its roots in the broader framework of the Markov Chain Monte Carlo (MCMC) methods. To understand Gibbs Sampling, you first need to grasp the basic concept of MCMC, a cornerstone in computational statistics.

MCMC methods, developed in the mid-20th century, are used for sampling from probability distributions where direct sampling is challenging. These methods generate samples by constructing a Markov chain that has the desired distribution as its equilibrium distribution. The samples from this Markov chain are used to approximate the probability distribution. MCMC methods revolutionized the field of statistical sampling by providing a way to handle complex, high-dimensional problems.

Gibbs Sampling emerged as a specific instance of the MCMC method. It was introduced in 1984 by the American mathematicians Stuart and Donald Geman, who are brothers, primarily for image processing applications. The core idea behind Gibbs Sampling is relatively simple: instead of sampling from a high-dimensional distribution directly, which is often computationally intensive, it samples sequentially from a series of conditional distributions. This process simplifies the sampling task significantly.

The algorithm iteratively samples from each variable’s conditional distribution, given the current values of the other variables. These iterative updates eventually lead the Markov chain to converge to the target distribution, from which the samples are drawn. This approach is particularly useful when dealing with multivariate distributions, making it easier to draw samples from complex models.

Gibbs Sampling fits into the MCMC framework as a particularly efficient method when the conditional distributions are easier to sample from than the joint distribution. This efficiency makes it a popular choice in various applications, from Bayesian statistics to machine learning. Its ability to handle large, complex datasets has made it an indispensable tool in the era of big data.

The Basics of Gibbs Sampling

Gibbs Sampling is a technique specifically designed to sample from a multivariate probability distribution, which is a distribution involving two or more random variables. Its primary function is to facilitate the exploration and understanding of complex, multi-dimensional distributions that are common in statistical analysis and data science.

Here’s a deeper look into the fundamentals of Gibbs Sampling.

How Gibbs Sampling Works

Gibbs Sampling involves initializing the variables, iteratively updating them, and aiming for convergence to the target distribution.

Here’s an overview of the step-by-step process involved in Gibbs Sampling.

Initialization: Begin by setting initial values for all the variables involved in the model. These initial values can be random or based on informed estimates. This step is important as it forms the starting point for the iterative process.

Variable Selection: At each step, focus on one variable while keeping the others fixed. This selective focus allows for a detailed exploration of each variable’s distribution in the context of the others.

Sampling from Conditional Distribution: For the selected variable, sample a new value from its conditional distribution. This conditional distribution is defined based on the current values of the other variables in the model.

Updating Variables: Replace the current value of the selected variable with the newly sampled value. This update reflects a new point in the distribution space, considering the interdependencies among variables.

Repeating the Process: Move to the next variable and repeat the sampling and updating process. Cycle through all variables in a systematic manner.

Iteration: Continue this cycle of updating each variable in turn, gradually exploring the distribution space.

Monitoring Convergence: As the process iterates, monitor the sequence of samples. Over time, these samples should start reflecting the characteristics of the target distribution.

Burn-in Period: The initial phase of the sampling process is known as the ‘burn-in’ period, during which the samples might not accurately represent the target distribution because the chain is still influenced by its starting values. These early samples are usually discarded, and the samples that follow are treated as draws from the target distribution.

Determining Convergence: Convergence is typically assessed through diagnostic methods or visually inspecting the trace of the samples. Once convergence is achieved, the samples can be used for statistical inference.
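The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation. The target (a standard bivariate normal with correlation `rho`), the function names, and the default burn-in length are all assumptions chosen because each conditional distribution is then a simple normal, N(rho * other, 1 - rho^2).

```python
import random

def gibbs_bivariate_normal(rho, n_samples, burn_in=500, init=(0.0, 0.0), seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho.

    Illustrative target: each full conditional is N(rho * other, 1 - rho**2).
    """
    rng = random.Random(seed)
    x1, x2 = init                        # Initialization
    cond_sd = (1.0 - rho ** 2) ** 0.5    # std. dev. of both conditionals
    samples = []
    for step in range(burn_in + n_samples):
        # Select X1, sample from P(X1 | X2 = x2), and update it.
        x1 = rng.gauss(rho * x2, cond_sd)
        # Move on to X2, conditioning on the freshly updated x1.
        x2 = rng.gauss(rho * x1, cond_sd)
        if step >= burn_in:              # keep only post-burn-in draws
            samples.append((x1, x2))
    return samples

def sample_correlation(pairs):
    """Pearson correlation of a list of (x, y) pairs."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    var_y = sum((y - mean_y) ** 2 for _, y in pairs)
    return cov / (var_x * var_y) ** 0.5

samples = gibbs_bivariate_normal(rho=0.8, n_samples=20000)
mean_x1 = sum(x for x, _ in samples) / len(samples)
print("mean of X1:", round(mean_x1, 3))                        # should be near 0
print("correlation:", round(sample_correlation(samples), 3))   # should be near 0.8
```

Because the sampler never draws from the joint distribution directly, the recovered mean and correlation are a quick sanity check that cycling through the two conditionals is enough to reproduce it.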

The Mathematical Foundation of Gibbs Sampling

Gibbs Sampling is based on the concept of conditional probability in multivariate distributions. It simplifies the process of sampling from a complex joint distribution by focusing on one variable at a time, given the current states of the others.

In a set of variables, such as X = (X1, X2, …, Xn), Gibbs Sampling focuses on the probability of one variable given the current values of the others. This is mathematically denoted as P(Xi | X-i), where X-i represents all variables in the set except Xi.

For example, if you have three variables X1, X2, X3, when updating X1, Gibbs Sampling looks at P(X1 | X2, X3).

The algorithm cycles through each variable, updating it based on the conditional distribution, which is influenced by the latest values of the other variables.
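Written out for the three-variable example, one full cycle might look as follows. The superscript (t) for the iteration number and the lowercase x for sampled values are notation assumed here for illustration. The point to notice is that each update conditions on the most recent value of every other variable.

```latex
\begin{aligned}
x_1^{(t+1)} &\sim P\left(X_1 \mid X_2 = x_2^{(t)},\; X_3 = x_3^{(t)}\right) \\
x_2^{(t+1)} &\sim P\left(X_2 \mid X_1 = x_1^{(t+1)},\; X_3 = x_3^{(t)}\right) \\
x_3^{(t+1)} &\sim P\left(X_3 \mid X_1 = x_1^{(t+1)},\; X_2 = x_2^{(t+1)}\right)
\end{aligned}
```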

One fundamental assumption of Gibbs Sampling is the ergodicity of the Markov chain it creates. Ergodicity ensures that the chain converges to its stationary distribution, which is the target joint distribution, irrespective of the initial values of the variables.
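The independence from initial values can be checked empirically by running several chains from widely separated starting points and comparing them. The sketch below uses the Gelman-Rubin statistic (often written R-hat), which is one common diagnostic rather than one named in this article. The bivariate normal target, the starting points, and the function names are illustrative assumptions.

```python
import random
import statistics

def run_chain(rho, n_steps, init, seed):
    """Run a Gibbs chain on a standard bivariate normal; return the X1 trace."""
    rng = random.Random(seed)
    x1, x2 = init
    cond_sd = (1.0 - rho ** 2) ** 0.5
    trace = []
    for _ in range(n_steps):
        x1 = rng.gauss(rho * x2, cond_sd)   # draw X1 given X2
        x2 = rng.gauss(rho * x1, cond_sd)   # draw X2 given the new X1
        trace.append(x1)
    return trace

def gelman_rubin(chains):
    """Potential scale reduction factor for equal-length chains."""
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    within = statistics.fmean([statistics.variance(c) for c in chains])
    between = n * statistics.variance(chain_means)
    pooled = (n - 1) / n * within + between / n
    return (pooled / within) ** 0.5

# Deliberately dispersed starting points (hypothetical values).
inits = [(-10.0, -10.0), (-3.0, -3.0), (3.0, 3.0), (10.0, 10.0)]
chains = [run_chain(0.8, 2000, init, seed) for seed, init in enumerate(inits)]

r_hat_early = gelman_rubin([c[:10] for c in chains])    # first 10 draws only
r_hat_late = gelman_rubin([c[500:] for c in chains])    # after a 500-step burn-in
print("R-hat, first 10 draws:", round(r_hat_early, 3))
print("R-hat, after burn-in: ", round(r_hat_late, 3))
```

A value close to 1 after burn-in suggests the chains have forgotten their starting points and agree with one another. A value well above 1 suggests they have not yet mixed.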

The method also assumes that the conditional distributions of each variable are known and can be sampled. This is necessary for the algorithm to work effectively.

Applications of Gibbs Sampling in Various Fields

Gibbs Sampling is an indispensable tool in numerous fields, each leveraging its ability to analyze complex data sets and model uncertain outcomes. Here are some examples of its applications in machine learning, bioinformatics, finance, and environmental science.

Machine Learning

In machine learning, Gibbs Sampling is especially valuable in Bayesian networks, where it’s used for both parameter estimation and handling missing data. This method plays a role in training algorithms for complex tasks like image recognition and natural language processing (NLP).

For example, in image recognition, it helps in deciphering intricate patterns from pixel data, important for applications ranging from facial recognition to medical imaging.
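As a toy illustration of Gibbs Sampling on pixel data, the sketch below denoises a small black-and-white image. It assumes an Ising-style model in which each pixel (+1 or -1) tends to agree with its four neighbours and with its observed noisy value. The model, the parameter names `coupling` and `data_weight`, and the test image are assumptions made for illustration and are not taken from any particular system.

```python
import math
import random

def gibbs_denoise(noisy, coupling=1.0, data_weight=1.0, sweeps=30, burn_in=10, seed=0):
    """Denoise a +1/-1 image by Gibbs sampling an Ising-style posterior."""
    rng = random.Random(seed)
    rows, cols = len(noisy), len(noisy[0])
    state = [row[:] for row in noisy]            # start from the noisy image
    votes = [[0] * cols for _ in range(rows)]    # running sum of sampled pixels
    for sweep in range(sweeps):
        for r in range(rows):
            for c in range(cols):
                # Sum of the (up to four) neighbouring pixels' current values.
                nbr_sum = 0
                if r > 0:
                    nbr_sum += state[r - 1][c]
                if r < rows - 1:
                    nbr_sum += state[r + 1][c]
                if c > 0:
                    nbr_sum += state[r][c - 1]
                if c < cols - 1:
                    nbr_sum += state[r][c + 1]
                # Conditional P(pixel = +1 | neighbours, observed pixel).
                field = coupling * nbr_sum + data_weight * noisy[r][c]
                p_plus = 1.0 / (1.0 + math.exp(-2.0 * field))
                state[r][c] = 1 if rng.random() < p_plus else -1
        if sweep >= burn_in:                     # accumulate post-burn-in samples
            for r in range(rows):
                for c in range(cols):
                    votes[r][c] += state[r][c]
    # Majority vote over the retained sweeps estimates each pixel's likeliest value.
    return [[1 if v >= 0 else -1 for v in row] for row in votes]

def count_errors(a, b):
    """Number of pixels at which two images differ."""
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))

size = 12
clean = [[-1 if c < size // 2 else 1 for c in range(size)] for _ in range(size)]
noise_rng = random.Random(1)
noisy = [[-p if noise_rng.random() < 0.1 else p for p in row] for row in clean]
restored = gibbs_denoise(noisy)
print("wrong pixels before:", count_errors(noisy, clean))
print("wrong pixels after: ", count_errors(restored, clean))
```

Each pixel update only needs the pixel's neighbours and its observed value, which is why the conditional distributions are cheap to sample even though the joint distribution over all pixels is not.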

In NLP, Gibbs Sampling contributes to the development of more sophisticated models by enhancing their ability to understand and predict language patterns.

Bioinformatics

Gibbs Sampling finds extensive use in bioinformatics, particularly in genomics. It’s a key tool for sequence alignment, assisting in aligning DNA sequences from various sources to identify genetic similarities and differences. This application is important for evolutionary biology studies and for identifying genetic markers of diseases.

Also, in genetic association studies, Gibbs Sampling helps in exploring the relationship between genetic variations and specific diseases, assisting researchers to pinpoint genetic risk factors.

Finance

In finance, Gibbs Sampling is used for risk assessment and portfolio optimization. It models the inherent uncertainty in financial markets, providing insights into potential market risks through scenario simulation.

This aspect of Gibbs Sampling is invaluable for investors looking to optimize their portfolios, as it helps in modeling expected returns and asset volatilities, leading to more informed investment strategies.

Environmental Science

In environmental science, especially in climate modeling and risk assessment, Gibbs Sampling helps in modeling the complex interactions within the climate system, allowing for the simulation of various climate scenarios. This capability is important for predicting future environmental changes and understanding their potential impacts.

Also, Gibbs Sampling is used in environmental risk assessments, such as evaluating the consequences of pollution on ecosystems or understanding the effects of large-scale environmental changes like deforestation.

Advanced Concepts and Extensions of Gibbs Sampling

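Gibbs Sampling, while powerful in its standard form, has given rise to several advanced variations and extensions. Examples include blocked Gibbs Sampling, which updates groups of variables together, and collapsed Gibbs Sampling, which integrates out some variables before sampling the rest. These developments enhance its usage and effectiveness, especially in more complex statistical scenarios.

Gibbs Sampling also maintains a strong connection with other statistical techniques. It can be viewed as a special case of the Metropolis-Hastings algorithm in which every proposed value is accepted, which places it within the broader family of MCMC methods.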

The Bottom Line

Gibbs Sampling is a key statistical tool used for sampling from complex multivariate distributions. Its iterative approach of updating each variable based on conditional probabilities allows for efficient analysis of high-dimensional data.

This method is widely applied in fields like machine learning for parameter estimation, bioinformatics for genetic studies, finance for risk assessment, and environmental science for climate modeling – just to name a few.

Its ability to handle intricate datasets and integrate with other statistical methods underlines its importance in today’s data-driven society.

FAQs

What is Gibbs sampling in simple terms?

What are the advantages of Gibbs sampling?

For what type of sequences is Gibbs sampling used?

What are the applications of Gibbs sampling?
