What is Generative AI?

Generative AI (genAI) is a broad label describing any type of artificial intelligence (AI) that can produce new text, images, video, or audio clips. Technically, this type of AI learns patterns from training data and generates new, unique outputs with the same statistical properties.

Generative AI models use prompts to guide content generation and use transfer learning to become more proficient. Early genAI models were built with specific data types and applications in mind. For example, Google’s DeepDream was designed to manipulate and enhance images. It can produce engaging, new visual effects, but the model’s development was primarily focused on image processing, and its capabilities do not apply to other types of data.

The field of generative AI is evolving quickly, however, and an increasing number of generative AI models are now multimodal. This means the same model can accept prompts in different data formats, such as text and images, and generate more than one type of output.

For example, the same genAI model could be used to:

- Generate creative text

- Generate informational text

- Answer questions on a wide range of topics

- Describe an image

- Generate a unique image based on a text prompt

- Translate text from one language to another

- Include the source of the model’s information in a response

Generative AI model development is often a collaborative effort requiring expertise in several research fields, programming, user experience (UX), and machine learning operations (MLOps). A multidisciplinary approach helps ensure that generative AI models are designed, trained, deployed, and maintained ethically and responsibly.

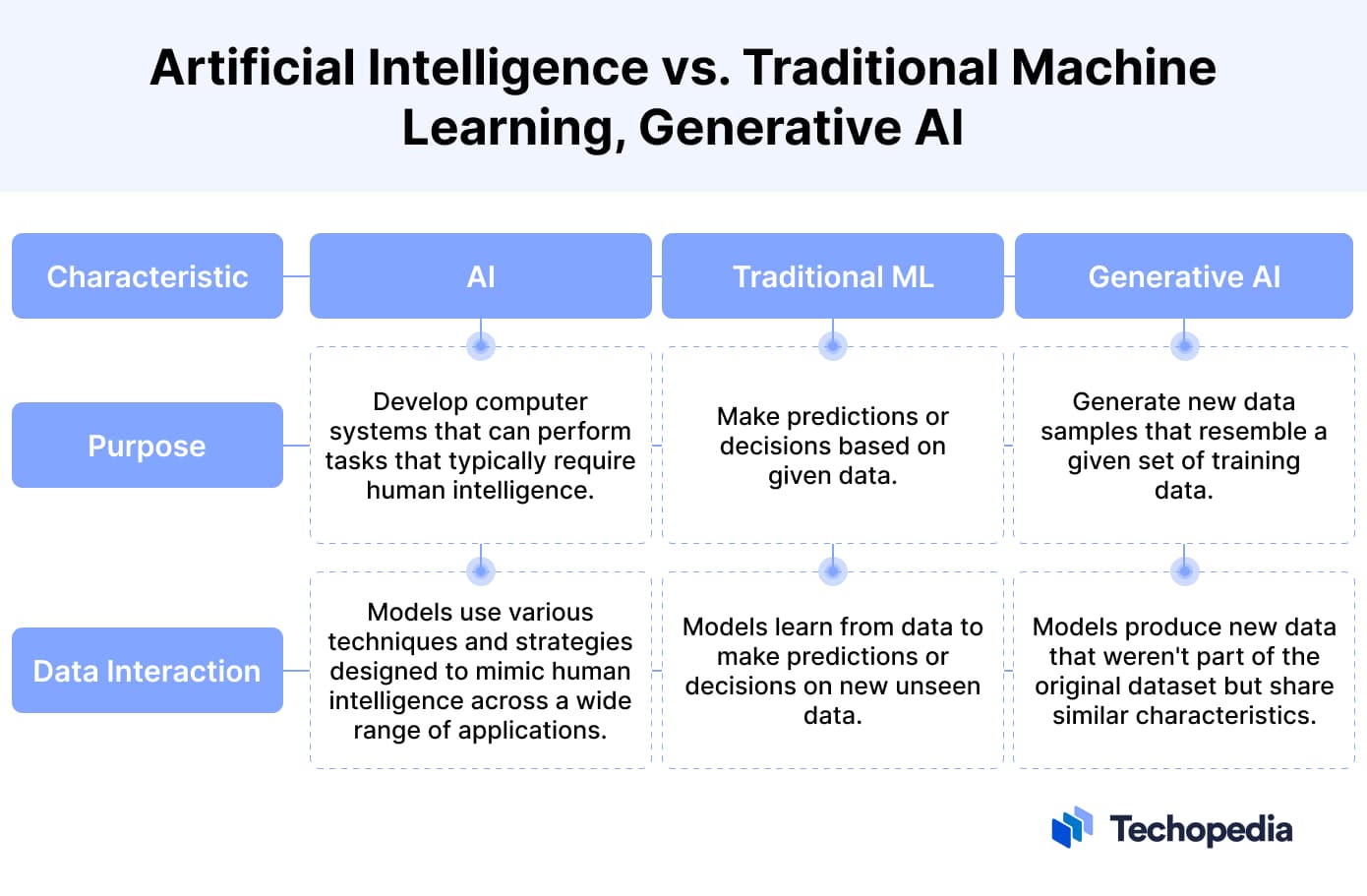

Generative AI vs. Traditional AI

Essentially, the relationship between artificial intelligence and generative AI is hierarchical.

- AI refers to the development of computer systems that can perform tasks that previously required human intelligence. Typically, such tasks involve perception, logical reasoning, decision-making, and natural language understanding (NLU).

- Machine learning is a subset of AI that involves the development of algorithms that enable computers to learn from data and make predictions or decisions without being explicitly programmed to do so.

- Generative AI is a subset of machine learning (ML) that focuses on creating new data samples that resemble real-world data.

Traditional AI typically involves rules-based systems or machine learning algorithms trained on a single data type to perform a single task. Many traditional ML algorithms are trained to produce a single, correct output, such as a classification label.

In contrast, generative AI uses deep learning (DL) strategies capable of learning from diverse datasets and producing outputs that fall within an acceptable range. This flexibility allows the same foundation model to be used for various tasks. For example, ChatGPT can now process image and text prompts.

The technology, a subset of ML, is already used to produce imaginative digital art, design new virtual environments, create musical compositions, formulate written content, assist in drug discovery by predicting molecular structures, write software code, and generate realistic video and audio clips.

How Does Generative AI Work?

Generative AI models use neural networks to learn patterns in data and generate new content. Once trained, the neural network can generate content similar to the data it was trained on. For example, a neural network trained on a dataset of text can be used to generate new text, and depending on the model’s input, the text output can take the form of a poem, a story, a complex mathematical calculation, or even programming code for software applications.

The usefulness of genAI outputs depends heavily on the quality and comprehensiveness of the training data, the model’s architecture, the processes used to train the model, and the prompts human users give the model.

Data quality is essential because that’s what genAI models use to learn how to generate high-quality outputs. The more diverse and comprehensive the training data, the more patterns and nuances the model will potentially be able to understand and replicate. When a model is trained on inconsistent, biased, or noisy data, it’s likely to produce outputs that mirror these flaws.

Training methodologies and evaluation strategies are also crucial. During training, the model uses feedback about its errors to adjust its internal parameters, the numerical values (weights) within the model's architecture.

The complexity of the model’s architecture can also play a significant role in output usefulness because the model’s architecture determines how the genAI processes and learns from training data.

On one hand, if the architecture is too simple, the model may struggle to capture important contextual nuances in the training data.

On the other hand, if the architecture is overly complex, the model may overfit and prioritize irrelevant details at the expense of important, underlying patterns.

Once trained, the model can be given prompts for creating new data. Prompts are how people interact with AI models and guide their output. The focus of a prompt depends on the desired output, the model's purpose, and the context in which the model is being used. For example, if the desired output is a cover letter, the prompt might include directions for writing style and word count. However, if the desired output is an audio clip, the prompt might include directions for musical genre and tempo.

Best Practices for Writing GenAI Prompts

A prompt is an input statement or cue that guides a genAI model’s output. GenAI models use prompts to generate new, original content statistically aligned with the context and requirements specified in the prompt.

While the specific details in a prompt reflect the type of desired output, best practices for writing text, image, audio, and video prompts rely on the same basic principles.

Be Precise: The more specific and detailed the prompt, the more tailored the response will likely be.

Provide Context: Context reduces ambiguity and helps the model generate outputs that match the prompter's intent.

Avoid Leading Questions: It’s important to craft prompts that are objective and free from leading information.

Reframe and Iterate Prompts: If the model doesn't return a useful response the first time, rephrase the prompt (or change the base multimedia sample) and try again.

Adjust Temperature Settings: Some AI platforms allow users to adjust temperature settings. Higher temperatures produce more random outputs, and lower temperatures produce more deterministic outputs.

Limit Response Length: When seeking concise outputs, craft prompts that specify constraints, such as word or character counts for text or duration limits for audio outputs.

Experiment with Multiple Prompts: Breaking a question or instruction down into several smaller prompts or trying out different base images, audio clips, and video samples will often yield more useful outputs.

Review and Revise Outputs: Generative AI outputs should always be reviewed because most genAI responses will need to be edited before they can be used. Be prepared to spend time on this important step!
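The temperature setting described in the list above typically works by rescaling the model's raw scores (logits) before a softmax turns them into probabilities. The minimal Python sketch below assumes a made-up three-token vocabulary and made-up logits; real platforms apply the same idea over vocabularies of many thousands of tokens, and the exact mechanics vary by provider.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw model scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Pick one token at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical vocabulary and scores, for illustration only.
tokens = ["cat", "dog", "car"]
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: " + ", ".join(f"{tok}={p:.3f}" for tok, p in zip(tokens, probs)))

rng = random.Random(0)  # fixed seed so the run is repeatable
print(sample_token(tokens, logits, 1.0, rng))
```

At a low temperature, almost all of the probability lands on the highest-scoring token, so outputs become nearly deterministic. At a high temperature, the probabilities flatten and less likely tokens are sampled more often.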

Types of Generative AI

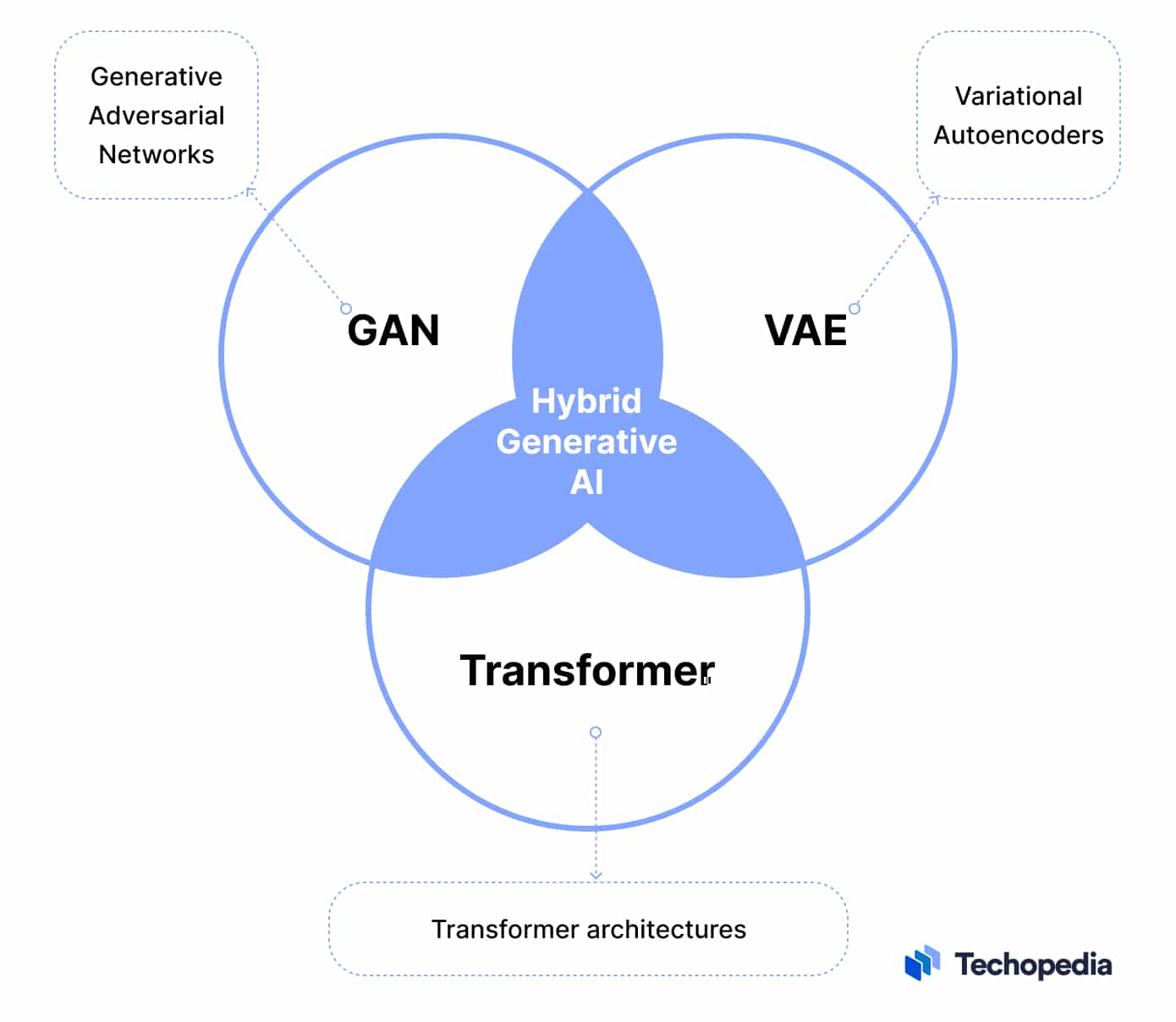

Generative AI can be applied to a wide range of tasks, and each type of task may require a different deep-learning architectural design to capture the specific patterns and features of the training data. Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformer architectures are important for building generative AI models.

Each type of architecture aims to get the AI model to a point where it can produce samples that are indistinguishable from the data it is being trained on.

Generative Adversarial Networks (GANs) consist of two neural networks: a generator and a discriminator. The two networks play a guessing game in which the generator gives the discriminator a data sample, and the discriminator predicts whether the sample is real or something the generator made up. The process is repeated until the generator can fool the discriminator with an acceptable level of accuracy.

Variational Autoencoders (VAEs) are composed of two main components: an encoder and a decoder. The encoder takes input data and compresses it into a latent space representation that preserves its most important features. The decoder then takes the latent space representation and generates new data that captures the most important features of the training data.

Transformer architectures consist of multiple stacked layers, each containing its own self-attention mechanism and feed-forward network. The self-attention mechanism enables each element in a sequence to consider and weigh its relationship with all other elements, and the feed-forward network processes the output of the self-attention mechanism and performs additional transformations on the data. As the model processes an input sequence through the stacked layers, it learns to generate new sequences that capture the most important information for the task.
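The core of the self-attention mechanism is scaled dot-product attention: each position scores every position in the sequence, turns those scores into weights with a softmax, and outputs a weighted average of value vectors. The sketch below is a deliberate simplification. It uses the input vectors directly as queries, keys, and values, whereas real transformer layers first multiply the inputs by learned projection matrices and usually run several attention heads in parallel.

```python
import math

def softmax(scores):
    """Convert a list of scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return one output vector per query.

    Each argument is a list of vectors (lists of floats) for the same sequence.
    """
    d_k = len(keys[0])
    dim = len(values[0])
    outputs = []
    for q in queries:
        # How strongly does this position relate to every position (including itself)?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to those weights.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs

# A toy sequence of three 2-dimensional token embeddings (made-up numbers).
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Simplification: the same vectors serve as queries, keys, and values.
for vec in scaled_dot_product_attention(sequence, sequence, sequence):
    print([round(x, 3) for x in vec])
```

Because the weights for each position sum to 1, every output is a blend of the value vectors, weighted toward the positions most relevant to that element.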

Generative Pre-trained Transformers (GPTs) are a specific implementation of the transformer architecture. This type of model is first pre-trained on vast amounts of text data to capture linguistic patterns and nuances. Once the foundation training has been completed, the model is then fine-tuned for a specific use.

Hybrid variations of generative AI architectures are becoming increasingly common as researchers continually seek to improve model performance, stability, and efficiency.

For example, GPT was not originally designed for multimodal AI. Still, OpenAI has been able to extend the large language model by integrating components capable of understanding images.

How Are Generative AI Models Trained?

Once the architecture for a generative AI model has been established, the model undergoes training. Throughout this phase, the model learns how to adjust its internal parameters to minimize a loss function, which measures the statistical discrepancy between the model's outputs and the data it was trained on.
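Adjusting parameters to minimize a loss can be illustrated with gradient descent on a deliberately tiny model. In this sketch, the "model" has a single parameter, `mu`, which it uses as its guess for every data point, and mean squared error stands in for the loss function. Both are illustrative assumptions: real generative models have millions or billions of parameters and use architecture-specific losses, but the basic update pattern is similar.

```python
def mse_loss(mu, data):
    """Average squared difference between the model's guess and each data point."""
    return sum((x - mu) ** 2 for x in data) / len(data)

def mse_gradient(mu, data):
    """Derivative of mse_loss with respect to mu."""
    return -2 * sum(x - mu for x in data) / len(data)

def train(data, learning_rate=0.1, steps=200, mu=0.0):
    for _ in range(steps):
        # Nudge the parameter in the direction that lowers the loss.
        mu -= learning_rate * mse_gradient(mu, data)
    return mu

data = [2.0, 3.0, 4.0, 5.0]
mu = train(data)
print(round(mu, 4), round(mse_loss(mu, data), 4))  # prints 3.5 1.25
```

The parameter converges to 3.5, the mean of the data, which is the value that minimizes mean squared error for this dataset.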

Generative Adversarial Networks are trained by alternating between two steps. In one step, the discriminator network is updated to better tell real data apart from the fake data the generator produces. In the other, the generator network is updated to turn random noise into fake data that the discriminator is more likely to classify as real. When training succeeds, the result is a generator network capable of creating high-quality, realistic data samples.
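The two competing GAN objectives are usually expressed as binary cross-entropy losses computed from the discriminator's output, the probability it assigns to a sample being real. The sketch below shows only those loss calculations, using made-up discriminator outputs; it omits the networks themselves and the gradient updates. The generator loss shown is the "non-saturating" form that is commonly used in practice, which is one option among several.

```python
import math

EPS = 1e-12  # keeps log() away from zero

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0.

    d_real: discriminator outputs (probability of "real") for real samples.
    d_fake: discriminator outputs for generated samples.
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Low when the discriminator scores generated samples near 1 (i.e., is fooled)."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs early in training: fakes are easy to spot.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]), generator_loss([0.1, 0.05]))
# Later: the generator's samples fool the discriminator more often.
print(discriminator_loss([0.6, 0.55], [0.5, 0.45]), generator_loss([0.5, 0.45]))
```

As the generator improves, its loss falls while the discriminator's loss rises, reflecting the adversarial tug-of-war between the two networks.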

Variational Autoencoders (VAEs) are also trained through a two-part process. The encoder network maps input data to a latent space, where it's represented as a probability distribution. A point is then sampled from this distribution, and the decoder network uses it to reconstruct the input data. During training, VAEs seek to minimize a loss function that includes two components: a reconstruction term, which measures how closely the output matches the input, and a regularization term, which keeps the learned latent distribution close to a simple prior distribution. The balance between reconstruction and regularization allows VAEs to generate new data samples by sampling from the learned latent space.
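The two VAE loss components, and the sampling step that connects the encoder to the decoder, can be sketched in a few lines. This sketch assumes the common textbook setup: the encoder outputs a mean (`mu`) and a log-variance (`log_var`) for each latent dimension, and the prior is a standard normal distribution. Squared error is used as the reconstruction term, although other choices exist. The encoder and decoder networks themselves are omitted, so their outputs appear as made-up numbers.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * noise, with noise drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def reconstruction_loss(x, x_hat):
    """How far the decoder's output x_hat is from the original input x (squared error)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def regularization_loss(mu, log_var):
    """KL divergence from N(mu, sigma^2) to the standard normal N(0, 1), summed over dimensions."""
    return -0.5 * sum(1 + lv - m ** 2 - math.exp(lv) for m, lv in zip(mu, log_var))

def vae_loss(x, x_hat, mu, log_var):
    return reconstruction_loss(x, x_hat) + regularization_loss(mu, log_var)

# Made-up encoder/decoder outputs for a single 3-value input.
x = [0.2, 0.8, 0.5]
mu, log_var = [0.3, -0.1], [-0.5, 0.2]
z = sample_latent(mu, log_var, random.Random(0))  # would be passed to the decoder
x_hat = [0.25, 0.7, 0.55]  # stand-in for the decoder's output
print(z, vae_loss(x, x_hat, mu, log_var))
```

After training, new data can be generated by drawing `z` directly from the standard normal prior and passing it through the decoder.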

Transformer Models are trained with a two-step process, as well. First, they are pre-trained on a large, general-purpose dataset. Then, they are fine-tuned with a smaller, task-specific dataset. Pre-training is typically self-supervised (for example, the model learns to predict the next word in unlabeled text), while fine-tuning can use supervised, unsupervised, or semi-supervised learning, depending on the available data and the specific task. This flexibility enables the same pre-trained transformer model to be adapted for different types of content.

Hybrid Generative AI Models are trained with a combination of techniques. The exact details for training a hybrid generative AI model will vary depending on the specific architecture, its objectives, and the data type involved.

How Are Generative AI Models Evaluated?

GenAI outputs need to be assessed objectively and subjectively for relevance and quality. Depending on what is learned from the evaluation, a model might need to be fine-tuned to improve performance or retrained with additional data. If necessary, the model’s architecture might also be revisited.

Evaluation is typically done using a separate dataset known as a validation or test set, which contains data the model hasn’t seen during training. The goal is to determine how well the model performs with new, previously unseen data.
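Holding data out for evaluation can be as simple as shuffling the examples once and setting a fraction aside before training begins. The sketch below assumes the data fits in a Python list; the 20% test fraction and the fixed seed are illustrative choices, not fixed rules.

```python
import random

def train_test_split(examples, test_fraction=0.2, seed=42):
    """Shuffle a copy of the data and split it into training and held-out test sets."""
    shuffled = list(examples)  # copy so the caller's data is left untouched
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

train_set, test_set = train_test_split(range(100))
print(len(train_set), len(test_set))  # prints 80 20
```

The model never sees `test_set` during training, so its score on that set approximates how it will perform on genuinely new inputs.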

A good evaluation score indicates that the model has learned meaningful patterns from the training data and can apply that knowledge to generate a useful output when given a new input prompt.

Popular metrics for assessing generative AI model performance include quantitative and/or qualitative scores for the following criteria:

Inception Score (IS) assesses the quality and diversity of generated images.

Fréchet Inception Distance (FID) Score measures the distance between the distributions of feature representations extracted from real and generated images; lower scores indicate greater similarity.

Precision and Recall Scores assess how well generated data samples match the real data distribution: precision reflects how realistic the generated samples are, and recall reflects how much of the real data's variety they cover.

Kernel Density Estimation (KDE) estimates the distribution of generated data and compares it to the real data distribution.

Structural Similarity Index (SSIM) measures the perceived similarity between two images by comparing their luminance, contrast, and structure.

BLEU (Bilingual Evaluation Understudy) Scores quantify the similarity between the machine-generated translation and one or more reference translations provided by human translators.

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) Scores measure the similarity between a machine-generated summary and one or more reference summaries provided by human annotators.

Perplexity Scores measure how well the model predicts a given sequence of words; a lower perplexity means the model found the text less surprising.

Intrinsic Evaluation assesses the model’s performance on intermediate sub-tasks within a broader application.

Extrinsic Evaluation assesses the model’s performance on the overall task it is designed for.

Few-Shot or Zero-Shot Learning assesses the model's ability to perform tasks when given very few or no task-specific examples.

Out-of-Distribution Detection assesses the model’s ability to detect out-of-distribution or anomalous data points.

Reconstruction Loss Scores measure how well the model can reconstruct input data from the learned latent space.

It is often necessary to use a combination of metrics to get a complete picture of a model’s strengths and weaknesses, and the choice of evaluation method depends on the specific model’s architecture and purpose. For example, Inception Score and FID are commonly used to evaluate the performance of image generation models. In contrast, BLEU and ROUGE are commonly used to assess the performance of text generation models.
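Two of the text metrics above, perplexity and a simplified ROUGE-1 recall, are simple enough to compute by hand. The sketch below assumes the model's probability for each actual next word is already available, and it uses plain whitespace tokenization. Established ROUGE and BLEU implementations add tokenization rules, stemming options, and other variants, so treat these functions as illustrations of the formulas rather than replacements for those tools.

```python
import math
from collections import Counter

def perplexity(token_probs):
    """exp of the average negative log-probability of the words that actually occurred.

    Lower is better: a model that always gave the right word probability 1 would score 1.
    """
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

def rouge1_recall(candidate, reference):
    """Share of the reference's words that also appear in the candidate (counts clipped)."""
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    overlap = sum(min(n, cand_counts[word]) for word, n in ref_counts.items())
    return overlap / sum(ref_counts.values())

# Hypothetical per-word probabilities a language model assigned to a 4-word sentence.
print(perplexity([0.25, 0.25, 0.25, 0.25]))  # about 4: as uncertain as a 4-way guess
print(rouge1_recall("the cat is on the mat", "the cat sat on the mat"))  # 5 of 6 words
```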

GenAI and the Turing Test

The Turing test can also be used to assess a generative AI model’s performance. This test, which Dr. Alan Turing introduced in his 1950 paper, “Computing Machinery and Intelligence,” was initially designed to test a machine’s ability to exhibit intelligent behavior indistinguishable from a human’s.

In the test’s traditional form, a human judge engages in a text-based conversation with both a human and a machine and tries to determine which responses were generated by the human and which responses were generated by the machine.

If the human judge cannot reliably determine which responses came from the machine, the machine is said to have passed the Turing test.

While the Turing test is historically significant and easy to understand, it cannot be used as the sole assessment because it focuses purely on natural language processing (NLP) and does not cover the full range of tasks that generative AI models can perform.

Another problem with using the Turing test to assess genAI is that not all generative AI outputs aim to replicate human behavior. DALL·E, for example, was built to create new, imaginative images from text prompts. Its outputs were never designed to replicate human responses.

Popular Real-world Uses for Generative AI

When generative AI is used as a productivity tool, it can be categorized as a type of augmented artificial intelligence.

Popular real-world uses for this type of augmented intelligence include:

- Image Generation: Quickly generate and/or manipulate a series of images to explore new creative possibilities.

- Text Generation: Generate news articles and other types of text formats in different writing styles.

- Data Augmentation: Generate synthetic data to train machine learning models when real data is limited or expensive.

- Drug Discovery: Generate virtual molecular structures and chemical compounds to speed up the discovery of new pharmaceuticals.

- Music Composition: Help composers explore new musical ideas by generating original pieces of music.

- Style Transfer: Apply different artistic styles to the same piece of content.

- VR/AR Development: Create virtual avatars and environments for video games, augmented reality platforms, and metaverse gaming.

- Medical Images: Analyze medical images and generate reports of the findings.

- Content Recommendation: Create personalized recommendations for e-commerce and entertainment platforms.

- Language Translation: Translate text from one language to another.

- Product Design: Generate new product designs and concepts virtually to save time and money.

- Anomaly Detection: Create virtual models of normal data patterns that will make it easier for other AI programs to identify defects in manufactured products or discover unusual patterns in finance and cybersecurity.

- Customer Experience Management: Use generative chatbots to answer customer questions and respond to customer feedback.

- Healthcare: Generate personalized treatment plans based on multimodal patient data.

Benefits and Challenges of Using Generative AI

The transformative impact of generative AI is already creating new types of educational, business, and research opportunities. The impact is also raising some important concerns.

On the positive side, generative AI technology is already being used to enhance productivity, with the goal of freeing people to redirect their time and energy toward higher-value tasks. In research fields where data is either limited or costly to procure, generative AI can simulate or augment data and help speed up research outcomes.

In manufacturing, generative models are used to generate virtual prototypes; in the enterprise, genAI is used to customize marketing messages based on individual preferences.

On the negative side, malicious actors have been abusing the technology to clone voices and conduct phishing exploits. Misuse of the technology is problematic because it has the potential to erode trust and upend economic, social, and political institutions.

Critical post-deployment considerations include monitoring the model for misuse and putting safeguards in place to balance the need for progress with responsible AI.

Many of the most popular genAI models are expected to require frequent updates to counter concept drift and retain their ability to produce high-quality, relevant outputs.

Will Generative AI Replace Humans in the Workplace?

Generative AI has already demonstrated the potential to transform the way people work.

Proponents of the technology argue that while generative AI will replace humans in some jobs, it will also create new ones. People will still be required to choose the right training data and select the most appropriate architecture for the generative task at hand, and people will always play an important role in evaluating model outputs.

Many critics worry that because generative AI can emulate different writing and visual styles, the technology will eventually decrease the financial value of human-created content.

In fact, generative AI played a significant role in the 2023 writers' strike in the United States. The strike lasted for nearly five months and was one of the longest writers' strikes in Hollywood history.

One of the critical issues in the strike was the use of AI in writers' rooms. As AI-powered writing tools became increasingly easy to use, some studios began using them to generate new scripts and rewrite existing ones.

Writers were concerned that using AI would lead to job losses and a decline in the quality of content.

Questions about the ownership of AI-generated content were also part of the strike. Writers argued that they should be credited and compensated for any AI-generated content used in edits of their work. Studios argued that AI-generated content is simply a tool, and writers should not be credited or paid for the use of the tool.

Ultimately, the writers and studios reached a settlement that included provisions for the acceptable use of genAI. While the settlement did not address all of the writers’ concerns, it did establish the principle that writers should have control over the use of AI in their work. It also helped to raise the general public’s awareness of the potential downsides of AI for the creative industries.

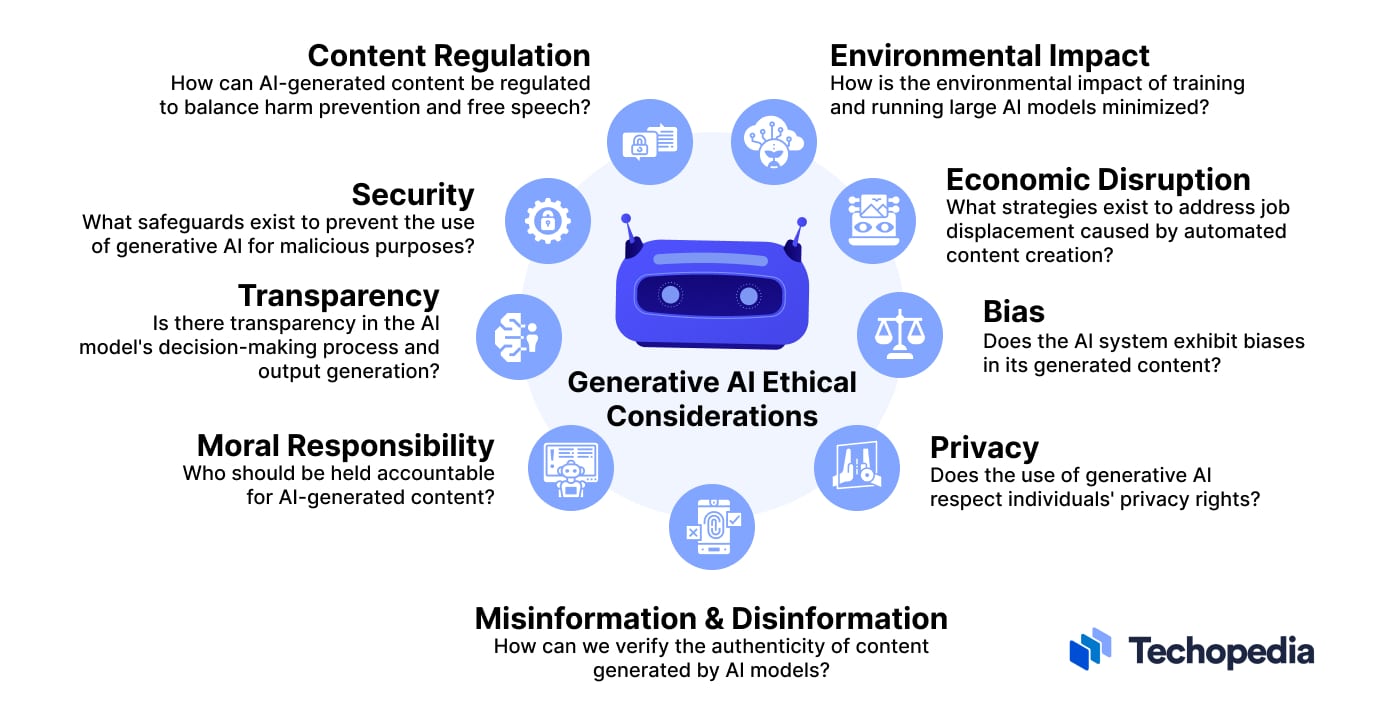

Ethical Concerns of Generative AI

The proliferation of generative AI is also raising questions about the ethical use of the technology in other industries.

One of the most disturbing aspects of generative AI is its tendency to hallucinate, generating responses that sound plausible but are irrelevant, incorrect, or fabricated.

Another concern is its role in the creation and dissemination of deepfakes. This type of hyper-realistic – yet wholly fabricated – content is already being weaponized to spread misinformation.

While some businesses welcome the potential uses of generative AI, others are restricting the use of the technology in the workplace to prevent intentional and unintentional data leakage.

Although integrating GenAI application programming interfaces (APIs) in third-party apps has made the technology more user-friendly, it has also made it easier for malicious actors to jailbreak generative AI apps and create deceptive content that features individuals without their knowledge or consent. This type of privacy breach is especially egregious because it has the potential to inflict reputational damage.

There's also an environmental dimension to the ethics of generative AI because training generative models takes a lot of processing power. Large generative models can require weeks (or even months) of training on many GPUs and/or TPUs running in parallel, which consumes a lot of energy.
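A back-of-the-envelope estimate shows how training energy adds up: multiply the number of accelerators by their average power draw and the training time. The figures below are hypothetical, chosen only to illustrate the arithmetic, and the estimate ignores data-center overhead such as cooling.

$$
E \approx N \times P_{\text{avg}} \times t = 1{,}000 \times 0.4\,\text{kW} \times 720\,\text{h} = 288{,}000\,\text{kWh}
$$

Here, $N$ is the number of GPUs, $P_{\text{avg}}$ is each GPU's average power draw, and $t$ is the training time (720 hours, or 30 days).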

Even though generating outputs in inference mode consumes less energy, the environmental impact still adds up because genAI services now handle requests from millions of users around the clock.

Last but not least, using web scraping to gather data for training generative AI models has given rise to an entirely new dimension of ethical concerns, especially among web publishers.

Web publishers invest time, effort, and resources to create and curate content. When web content and books are scraped without permission or financial compensation, it essentially amounts to the unauthorized use or theft of intellectual property.

Publishers’ concerns highlight the need for transparent, consensual, and responsible data collection practices. Balancing technological advancement with rules for the ethical and legal use of genAI technology is expected to be an ongoing challenge that governments, industries, and individuals must address collaboratively.

Popular Generative AI Software Apps and Browser Extensions

Despite concerns about the ethical development, deployment, and use of generative AI technology, genAI software apps and browser extensions have gained significant attention due to their versatility and usefulness in various applications.

Popular Tools for Generating Content

ChatGPT: This generative AI chatbot developed by OpenAI is known for its ability to generate realistic and coherent text. ChatGPT is available in both free and paid versions.

ChatGPT for Google: ChatGPT for Google is a free Chrome extension that allows users to generate text directly from Google Search.

Jasper: Jasper is a paid generative AI writing assistant for business that is known for helping marketers create high-quality content quickly and easily.

Grammarly: Grammarly is a writing assistant with generative AI features designed to help users compose, ideate, rewrite, and reply contextually within existing workflows.

Quillbot: Quillbot is an integrated suite of writing assistant tools that can be accessed through a single dashboard.

Compose AI: Compose AI is a Chrome browser extension known for its AI-powered autocompletion and text generation features.

Popular Generative AI Apps for Art

Art AI generators provide end users a fun way to experiment with artificial intelligence. Popular and free art AI generators include:

DeepDream Generator: DeepDream Generator uses deep learning algorithms to create surrealistic, dream-like images.

Stable Diffusion: Stable Diffusion can be used to edit images and generate new images from text descriptions.

Pikazo: Pikazo uses AI filters to turn digital photos into paintings of various styles.

Artbreeder: Artbreeder uses genetic algorithms and deep learning to create images of imaginary offspring.

Popular Generative AI Apps for Writers

The following platforms provide end users with a good place to experiment with using AI for creative writing and research purposes:

Write With Transformer: Write With Transformer allows end users to use Hugging Face’s transformer ML models to generate text, answer questions and complete sentences.

AI Dungeon: AI Dungeon uses a generative language model to create unique storylines based on player choices.

Writesonic: Writesonic includes search engine optimization (SEO) features and is a popular choice for writing ecommerce product descriptions.

Popular Generative AI Apps for Music

Here are some of the best generative AI music apps that can be used with free trial licenses:

Amper Music: Amper Music creates musical tracks from pre-recorded samples.

AIVA: AIVA uses AI algorithms to compose original music in various genres and styles.

Ecrette Music: Ecrette Music uses AI to create royalty-free music for both personal and commercial projects.

MuseNet: MuseNet can compose music using up to ten different instruments and in up to 15 different styles.

Popular Generative AI Apps for Video

Generative AI can be used to create video clips through a process known as video synthesis. Popular examples of generative AI apps for video are:

Synthesia: Synthesia allows users to use text prompts to create short videos that appear to be read by AI avatars.

Pictory: Pictory enables content marketers to generate short-form videos from scripts, articles, or existing video footage.

Descript: Descript uses genAI for automatic transcription, text-to-speech, and video summarization.

Runway: Runway allows users to experiment with a variety of generative AI tools that accept text, image and/or video prompts.